Author: Guo Meiting, Feng Liange

"Do you like my coat?"

On January 7, Jensen Huang appeared in a classic black leather jacket for his 90-minute "CES Solo Show."

The Nvidia founder and CEO held a Grace Blackwell NVLink72 wafer "shield" on stage, striking a "Captain America" pose, and vowed to build a giant chip.

"The miracle of the Blackwell system lies in its unprecedented scale, and the Blackwell chip is the largest single chip in human history. Our ultimate goal is Physical AI," said "Captain Chip" Huang, adding that Nvidia is able to meet the needs of nearly every data center in the world.

Huang did not give a keynote at last year's CES. This year, hundreds of people were already lining up at 4 p.m. for a speech that began at 6:30 p.m. U.S. time.

Source: Screenshot from Huang's speech video

Since the rise of generative AI, Nvidia has seemed to have no real rival. However, such a huge market has drawn covetous looks from many technology companies, and on the eve of this year's CES one of Nvidia's competitors joined the "trillion-dollar market value club".

Once again it was an ethnic Chinese executive who stirred up the storm: Hock Tan (Chen Fuyang), CEO of Broadcom. In recent days, ASIC (application-specific integrated circuit) has become a buzzword in the chip industry, and Chen Fuyang predicts that by 2027 market demand for custom AI ASICs will reach 60 billion to 90 billion US dollars.

In January this year, market rumors said that Nvidia may have established an ASIC department and plans to recruit thousands of chip design, software development and AI R&D personnel. However, industry insiders denied the above rumors to the Times Financial Reporter.

Nvidia, which has profited handsomely, remains firmly committed to GPUs, but the tech giants want to end their over-reliance on the company. ASICs have given them hope of a breakthrough. Chen Fuyang previously said that Broadcom is developing custom AI chips with three large cloud computing providers in the United States. According to reports, Broadcom's current customers include Google, Meta, ByteDance, Apple and OpenAI, among others.

According to information obtained by the Times Financial Reporter, although current ASIC chips can meet part of the demand for AI computing power, they are not a substitute for GPUs. Industry insiders say that the AI market is huge and that it is normal for some companies to build special-purpose chips for specific needs. Many large enterprises are already making, or trying to make, such products.

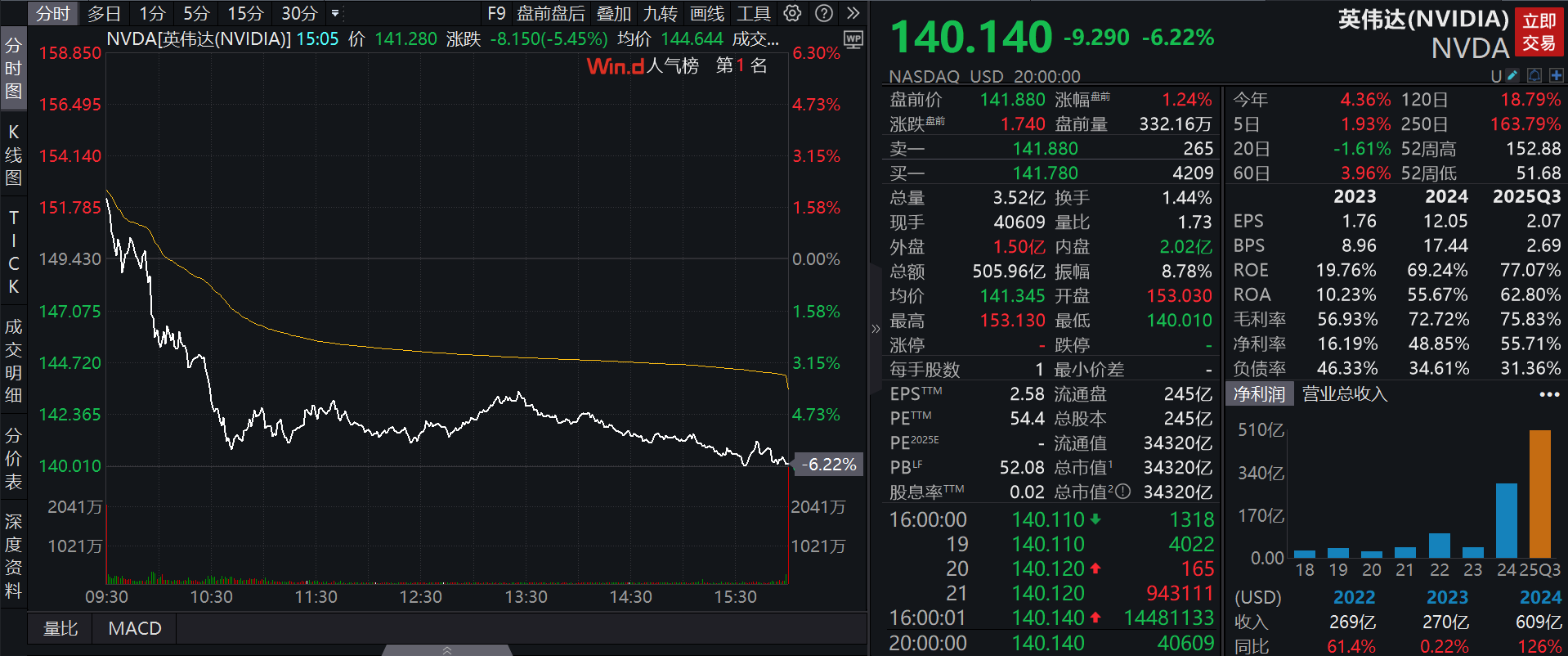

After Huang's speech, Nvidia shares closed down more than 6% on the U.S. stock market on January 7, and had risen more than 1.2% on January 8 as of press time.

Source: Screenshot from Wind

Huang's ambitions

Nvidia is still ambitious.

In March 2024, Nvidia announced its new-generation AI chip architecture, Blackwell. Blackwell has 208 billion transistors, more than twice the 80 billion transistors of the previous-generation "Hopper" chip, and can support AI models with up to 10 trillion parameters. The first chip with the Blackwell architecture is called GB200.

In the months that followed, however, Blackwell deliveries were reported to be delayed several times. According to media reports, the Blackwell AI chip had serious overheating problems in high-capacity server racks, which led to design adjustments and project delays.

In November last year, at Nvidia's third-quarter 2024 earnings conference, Huang responded that the Blackwell chip was in full production and was expected to remain in short supply for the next few fiscal quarters.

At CES, Huang returned to the topic. He said Blackwell delivers a four-fold increase in performance over the previous generation. All major cloud service providers now have systems ready, and 15 computer manufacturers have launched about 200 different models and configurations. These include liquid- and air-cooled versions of x86 Nvidia GPUs, as well as different types of systems such as NVLink 36x2 and NVLink 72x1 to meet the needs of virtually any data center in the world. These systems are being manufactured at approximately 45 plants.

Source: NVIDIA WeChat public account

Interestingly, Huang is also preparing to launch a giant chip. On stage, he held up a chip model about half the height of a person to show the audience.

"Our goal is to create a giant chip called Grace Blackwell NVLink72," Huang said.

According to him, the chip will use 72 Blackwell GPUs, have 130 trillion transistors and 600,000 components, weigh up to 1.5 tons, and consume 120 kilowatts, with a "spine" at the back that connects all the components. It also contains 5,000 copper cables with a total length of 2 miles. The chip has 14 terabytes of memory and a memory bandwidth of 1.2 petabytes per second, which is roughly equivalent to all the traffic on the Internet worldwide.

More possibilities than just GPUs

In his 90-minute speech, Huang did not mention ASIC chips, as if the recent investor frenzy over Broadcom had no effect on him.

Although people familiar with the matter have denied the recent rumors that Nvidia is recruiting for an ASIC team, this is not the first time Nvidia has been reported to be moving into ASICs. In February last year, Reuters reported that Nvidia was building a new business unit focused on designing custom chips, including advanced AI processors, for cloud vendors and other companies.

In June of the same year, it was reported that when Huang was asked at a press conference about rumors of Nvidia entering the ASIC market, he answered "Yes!", confirming the decision for the first time.

Perhaps Nvidia had already seen the potential of ASIC chips before Broadcom's surge. Regardless of whether Nvidia ultimately expands this business, ASIC chips have already made a name for themselves in the AI chip industry.

According to industry insiders, chips generally fall into three categories. The first is non-ASIC chips represented by CPUs and GPUs, that is, general-purpose chips. This type of chip is designed to handle a variety of computing tasks. Its advantages are versatility and a mature technology ecosystem, and its disadvantage is high power consumption.

The second category is the FPGA, a flexible programmable chip, whose main manufacturers include AMD and Altera. This type of chip lets users configure and reconfigure its internal logic functions through code, so it is mostly used in scientific research and in the early development stage of commercial digital products.

The third category is ASIC or XPU chips. These chips emerge once a market has developed to a certain stage and demand in particular segments becomes prominent. Chip designers then develop XPU chips specifically for those segments, which both matches the segments' needs and reduces manufacturing costs.

"Once such ASIC chips are mass-produced, the program is to some extent fixed in the hardware, which can raise performance and efficiency several times over the original baseline, with much lower power consumption than a CPU or GPU," the above-mentioned industry insiders said.

Tech giants are aiming for this as well. For example, Google introduced its own AI chip, the TPU (tensor processing unit, a representative ASIC), as early as ten years ago, and this series of chips is produced in cooperation with Broadcom. On December 12 last year, Google announced that it was officially opening up its sixth-generation TPU, Trillium, to Google Cloud customers. Amazon's ASIC products include Trainium and Inferentia, which are used for training and inference, respectively. Microsoft and Meta have also launched their own ASIC products, Maia 100 and MTIA.

In the view of He Hui, director of semiconductor industry research at Omdia, Nvidia's GPU, as a general-purpose product, is indispensable for large-scale computing centers. However, different AI companies have their own core algorithms, which are often better suited to custom hardware architectures. In such cases, companies such as Broadcom that can provide ASIC services become an important complement.

"For any company engaged in AI computing hardware architecture, versatility and customization are both must-have qualities," He Hui said.

Qiu Peiwen, an analyst at TrendForce, believes that ASICs are largely customized for specific customers, while GPUs are usually standard products suitable for most customers. Moreover, the ASICs under development still lag far behind high-end Nvidia chips such as the B200 in computing performance. As a result, ASICs and GPUs each have their own target markets and applications.

Judging from current market feedback, ASIC chips serve more as a supplement to GPUs.

Why Broadcom?

Founded in 1991, Broadcom has actually been deeply involved in the ASIC field for many years, and can be called the "big brother" in this field.

Judging from the financial report data alone, Broadcom's revenue is still growing while its profit is not. In fiscal year 2024, Broadcom's revenue was $51.6 billion, a year-on-year increase of 44%, but its net profit was $5.895 billion, a year-on-year decrease of 58%. Looking at specific businesses, however, Broadcom's artificial intelligence revenue increased by 220% year-on-year to $12.2 billion, driving semiconductor revenue to a record high of $30.1 billion.

Chen Fuyang was optimistic on the earnings call: "We currently have three hyperscale customers who have developed their own roadmaps for multiple generations of AI XPUs, which they plan to deploy at different speeds over the next three years. We believe that by 2027, each of them plans to deploy clusters of 1 million XPUs on a single architecture."

He Hui believes that Broadcom's strength lies in "connectivity". "In the era of AI, computing power and interconnection technology play a crucial role." She said that Broadcom has strong capabilities in interface chips and years of experience in computing chips, so it can effectively combine these two key technologies to provide customers with advanced accelerated computing solutions. This is also why Nvidia has been actively promoting its NVLink technology.

Dai Wenliang, founder and president of Xinhe Semiconductor, said, "Broadcom has launched 3.5D F2F (Face-to-Face) technology, which can significantly improve the interconnection density, power efficiency and performance of chips."

At the end of last year, Broadcom announced its 3.5D eXtreme Dimension System in Package (XDSiP) platform technology. This is the industry's first 3.5D F2F packaging technology. It integrates more than 6,000 mm² of silicon and up to 12 HBM memory stacks in a single package to meet the high-integration, high-power, and energy-efficient computing needs of AI chips.

Dai Wenliang told the Times Financial Reporter that 3.5D F2F packaging is an architectural innovation. Before it, the advanced packaging technologies more common in the industry were either 2.5D packaging or designs that connect dies through a bridge chip placed underneath. 3.5D F2F packaging may not be the best solution, but it gives the industry another way to address current pain points.

Dai Wenliang added that demand for AI computing power is currently skyrocketing and Nvidia's general-purpose GPUs are hard to come by, so energy efficiency is becoming more and more important. The flexibility that lets general-purpose GPUs juggle multiple types of computing tasks inevitably comes at the expense of performance and efficiency in specific applications such as video processing, network communications and deep learning, especially under high load or continuous operation. Because an ASIC chip is customized for a specific application, it has an inherent advantage: under the same working conditions, Broadcom's ASIC chips can deliver a significant increase in performance and very strong computing power, which makes them better suited to applications that require accurate and efficient processing. "This kind of competitive mentality is also worth encouraging. The industry will improve performance by letting a hundred flowers of innovation bloom, rather than through endless cutthroat competition and price cutting."

After Broadcom, who else?

"It's good for us." After Broadcom became an overnight sensation, some domestic industry insiders working on ASIC chips told the Times Financial Reporter that Broadcom had raised the profile of XPUs, which they found encouraging.

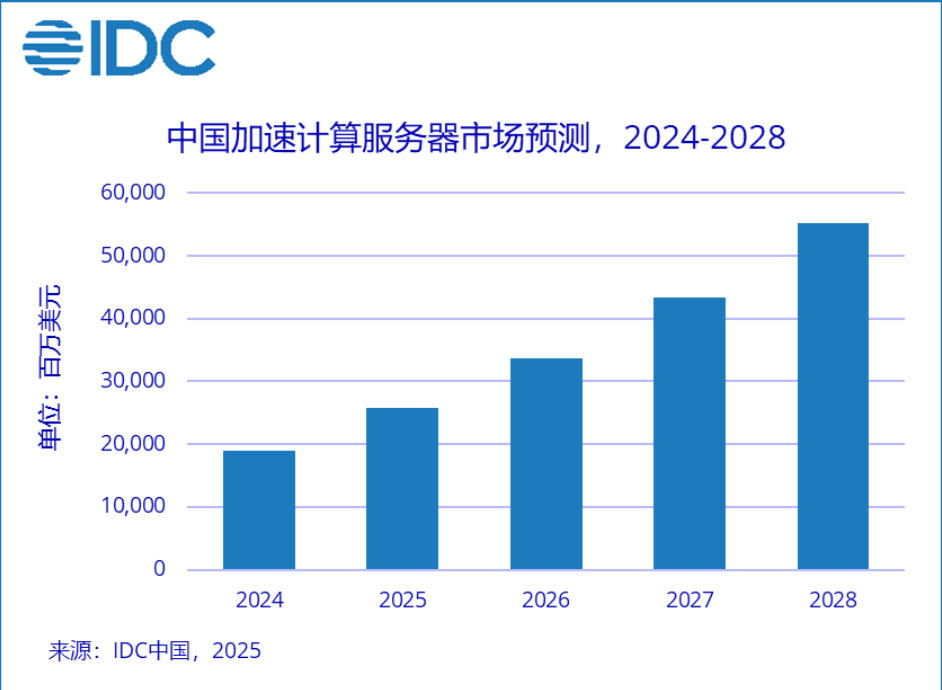

On January 3, IDC, a third-party data agency, released its latest forecast for the accelerated computing server market. It shows that China's accelerated server market would reach 19 billion US dollars in 2024, up 87% from 2023. GPU (graphics processing unit) servers remain dominant, accounting for 74% of the market. By 2028, however, China's accelerated computing server market is forecast to exceed $55 billion, with ASIC accelerated servers accounting for nearly 40%.

Source: IDC WeChat public account

Perhaps in the future, ASICs will grow rapidly in market share, but they will not be able to replace general-purpose processors.

Qiu Peiwen said that in addition to using Nvidia GPUs, cloud companies will also actively develop their own ASIC chips. Doing so not only lets them customize chips for their own applications, but also reduces their reliance on Nvidia chips and lowers their spending. Broadcom is itself an IC design company and also provides contract IC design services to customers. Whether it will affect other chip makers depends mainly on whether customers want to develop their own ICs and then replace their original suppliers.

The above-mentioned industry insiders believe that whether ASIC chips can be deployed on their own depends on the application scenario. For example, if a data center being built somewhere serves only AI computing tasks for scientific research, customized ASIC chips can meet that demand, using computing power more efficiently thanks to lower power consumption and dedicated features. However, if the data center also needs to handle tasks such as traffic and security, GPUs are preferred. The customers being served determine the choice of chip type.

"When a market segment shows great potential, there will inevitably be dedicated chips. Because the market in this field is large enough, it is worth enterprises investing resources to develop special chips, reduce costs through mass production, make full use of their efficiency, and seize market share. This is the market logic behind ASIC chips," the industry insider said.

At present, many domestic AI chip manufacturers have chosen the ASIC direction. For example, the unicorn company Zhonghao Xinying focuses on domestic TPU chips and related solutions, and the TPU is itself a type of ASIC. In the second half of 2023, Zhonghao Xinying's self-developed high-performance AI chip based on the GPTPU architecture, named "Chana" ("Instant"), entered mass production. According to reports, in the second half of 2024 the company was both rolling out more intelligent computing center projects and strengthening its ecosystem, further optimizing its software platform and building an integrated software and hardware offering better suited to rapid deployment and independent use by domestic enterprises.

In addition, Cambricon-U (688256.SH), known as the first AI chip stock, has also taken the ASIC direction. According to Wind data, Cambricon's share price has risen 480.34% in the past year. As of the close of trading on January 8, Cambricon rose 1.11% to 726 yuan per share, with a market value of more than 300 billion yuan.

In Dai Wenliang's view, there will be more and more AI applications for small scenarios and more small-parameter models in the future. "Most of the time, hundreds of billions of parameters and 10,000-card clusters are a game for a few manufacturers, and most functions and scenarios do not require hardware support of this magnitude." In addition, on-device AI concepts such as AI PCs and AI phones are getting more and more attention, which confirms this trend. Custom ASIC chips can therefore be described as a "cost-effective" choice.
